12 Jan Agricultural Pattern Recognition with UAVs and Deep Learning

Tamara Todić

Junior Researcher, BioSense Institute

Fields often show undesirable patterns such as weed clusters, planter skips, double planting, standing water, or waterways. These patterns significantly affect both the quantity and quality of the yield, so there is interest in detecting them early enough to take appropriate action and thereby reduce or even eliminate potential losses.

Drone imagery is well suited to detecting these patterns. It provides a bird’s-eye view, images can be captured whenever needed (there is no need to wait for a satellite to pass over the field of interest), and clouds are easier to avoid, because drones fly at much lower altitudes than satellites.

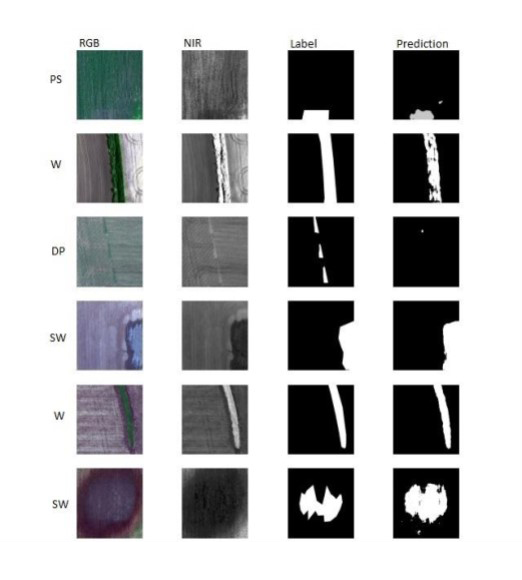

Example from the dataset

The dataset we used was released for the Agriculture-Vision Challenge, first organized at the CVPR conference in 2020. An example from the dataset is shown in the image above. The dataset consists of 12901 images of corn and soybean fields in Illinois and Iowa. The images were captured by drone from a bird’s-eye view and contain RGB and NIR (near-infrared) channels. Alongside the visible part of the electromagnetic spectrum, NIR bands are often used in agricultural image processing because NIR reflectance is related to plant health: healthy plants reflect more strongly in the NIR bands than unhealthy ones. All images in the dataset were labeled by agronomy experts. Six types of patterns are present in the dataset:

• Cloud shadow

• Planter skip

• Double plant

• Standing water

• Waterway

• Weed cluster

For every image in the dataset there is a mask marking the valid pixels, where no aberrations occurred during acquisition, and a mask marking the pixels of interest. When the model is evaluated, only valid pixels of interest are considered.
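The masking step can be sketched in a few lines of NumPy. This is only an illustration: the function names are hypothetical, the masks are assumed to be arrays in which nonzero means "keep", and pixel accuracy is used as a stand-in metric, not necessarily the one used in our evaluation.

```python
import numpy as np

def evaluation_mask(valid_mask, interest_mask):
    """Boolean mask of pixels that are both valid and of interest.

    Assumption: nonzero values in either mask mean "keep this pixel".
    """
    return (valid_mask > 0) & (interest_mask > 0)

def masked_pixel_accuracy(label, prediction, valid_mask, interest_mask):
    """Fraction of correctly predicted pixels among the kept pixels only."""
    keep = evaluation_mask(valid_mask, interest_mask)
    if not keep.any():
        return float("nan")  # nothing to evaluate in this image
    return float((label[keep] == prediction[keep]).mean())

# Toy 2x3 example with three classes (0, 1, 2).
label = np.array([[0, 1, 1], [2, 2, 0]])
prediction = np.array([[0, 1, 2], [2, 0, 0]])
valid_mask = np.array([[1, 1, 1], [1, 0, 1]])     # one invalid pixel
interest_mask = np.array([[1, 1, 1], [1, 1, 0]])  # one pixel outside the area of interest

accuracy = masked_pixel_accuracy(label, prediction, valid_mask, interest_mask)
print(accuracy)  # 4 pixels are kept, 3 of them are predicted correctly
```

Pixels excluded by either mask influence neither the numerator nor the denominator, so acquisition artifacts cannot inflate or deflate the score.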

As a first step, the problem was solved for four of the six classes:

• Planter skip

• Double plant

• Standing water

• Waterway

The dataset is imbalanced in the number of images per class: most examples belong to the weed cluster and cloud shadow classes. For that reason, these two classes were not included in the first solution.

In addition, some pattern classes cover very small areas, so anomaly pixels are heavily outnumbered by normal pixels, which makes detection even harder.
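One way to quantify this pixel-level imbalance is to count how many pixels each class occupies across the label maps. The sketch below is an assumption about how such a check could look, not a description of our pipeline; the function name is hypothetical, and labels are assumed to be integer maps with 0 for normal pixels.

```python
import numpy as np

def class_pixel_fractions(label_maps, num_classes):
    """Share of all pixels that belongs to each class.

    Assumption: every label map holds integers in [0, num_classes).
    """
    counts = np.zeros(num_classes, dtype=np.int64)
    for label in label_maps:
        counts += np.bincount(label.ravel(), minlength=num_classes)
    return counts / counts.sum()

# Toy example: class 0 is "normal", classes 1 and 2 are anomaly patterns.
label_maps = [
    np.array([[0, 0], [0, 1]]),
    np.array([[0, 0], [2, 0]]),
]
fractions = class_pixel_fractions(label_maps, num_classes=3)
print(fractions)  # class 0 dominates; each anomaly class covers a single pixel
```

Fractions like these make it easy to see which classes are rare at the pixel level, even when they appear in many images.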

We address the problem with deep convolutional neural networks, specifically semantic segmentation architectures, which assign every pixel to one of the possible classes. These networks have a very large number of parameters to learn during training, so training demands considerable time and memory. It is also important to collect as many images as possible, because a model generally performs better when it is trained on many different examples.
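To make the per-pixel assignment concrete, here is a minimal sketch of the last step of a typical semantic segmentation pipeline: turning per-class score maps into a label map. The network itself is omitted, and the `(num_classes, height, width)` layout and the function name are assumptions made for illustration.

```python
import numpy as np

def scores_to_label_map(scores):
    """Pick the highest-scoring class for every pixel.

    scores: array of shape (num_classes, height, width), assumed layout.
    Returns an integer array of shape (height, width).
    """
    return np.argmax(scores, axis=0)

# Toy scores for 3 classes on a 2x2 image.
scores = np.array([
    [[0.90, 0.20], [0.10, 0.30]],  # class 0
    [[0.05, 0.70], [0.20, 0.30]],  # class 1
    [[0.05, 0.10], [0.70, 0.40]],  # class 2
])
label_map = scores_to_label_map(scores)
print(label_map)
```

Each output pixel holds the index of exactly one class, which is what distinguishes semantic segmentation from whole-image classification.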

Some initial results are shown in the following image. The first column contains the RGB images, the second the NIR images, the third the labels, and the fourth the model predictions.

Initial model results

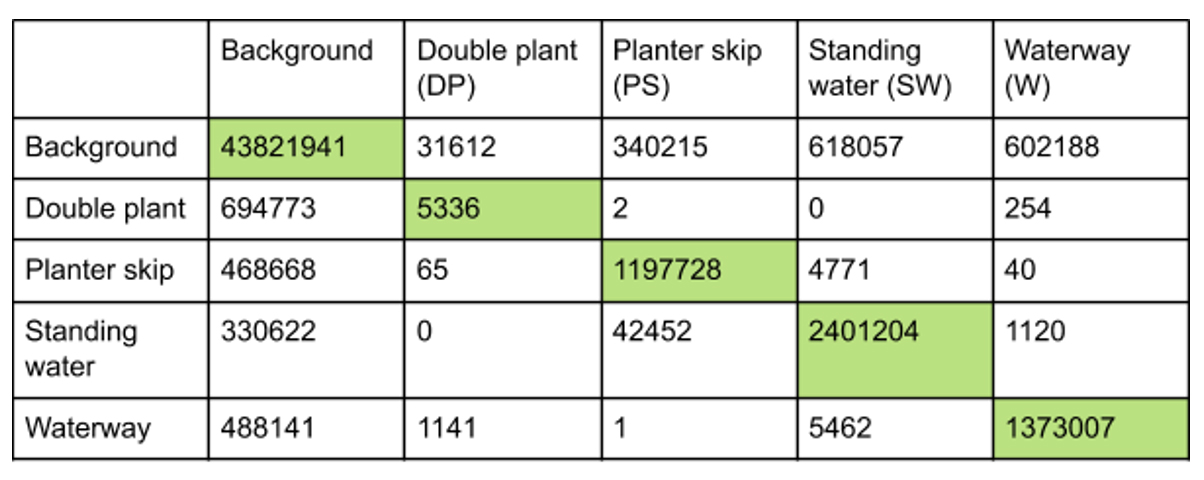

Confusion matrix

The confusion matrix is shown in the table above. The values are pixel counts; rows correspond to true classes and columns to predicted classes.
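A pixel-level confusion matrix with this layout can be computed as in the following sketch. The function name is hypothetical, and labels and predictions are assumed to be integer arrays of the same shape with values in `[0, num_classes)`.

```python
import numpy as np

def pixel_confusion_matrix(label, prediction, num_classes):
    """Count pixels per (true class, predicted class) pair.

    Rows are true classes, columns are predicted classes.
    """
    # Encode each (true, predicted) pair as a single index, then count.
    index = label.ravel() * num_classes + prediction.ravel()
    counts = np.bincount(index, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)

# Toy 2x3 example with three classes.
label = np.array([[0, 0, 1], [1, 2, 2]])
prediction = np.array([[0, 1, 1], [1, 2, 0]])
matrix = pixel_confusion_matrix(label, prediction, num_classes=3)
print(matrix)
```

The diagonal holds the correctly classified pixels, and each row sums to the number of pixels that truly belong to that class.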

Further research will focus on including the two remaining classes in the model and on trying other neural network architectures. We also need to pay more attention to the segmentation of small-area patterns, because that is where most of the errors occur.

The goal is to integrate the agricultural pattern recognition system into yield prediction systems, so that the effects of anomalies are taken into account when yield is calculated, thereby increasing prediction accuracy. With such a system, farmers could also spot possible oversights made during the season and correct them to minimize the negative consequences.